On a tense evening on a National Basketball Association (NBA) court, a player drives towards the basket. He hesitates for a split second. Should he shoot or should he pass? Millions of fans may debate the choice, but there is no way to replay the same moment and try both options. In science, this kind of question is known as a counterfactual: what would have happened if things had gone differently?

Now, researchers from Nagoya University in Japan have unveiled a new artificial intelligence model that tackles this challenge. The study, led by Keisuke Fujii and published in IEEE Transactions on Neural Networks and Learning Systems in February 2024, introduces a framework that can simulate alternative futures in complex multiagent systems. The paper, “Estimating Counterfactual Treatment Outcomes Over Time in Complex Multiagent Scenarios”, could change how we evaluate interventions in autonomous driving, biological experiments, and competitive sports.

At its heart, the project addresses one of science’s most intriguing puzzles: how to measure the impact of actions that were never taken.

The power of counterfactual thinking

The notion of counterfactuals has fascinated philosophers and scientists for centuries. What if Archimedes had had access to modern tools? What if different policies had been applied during the pandemic? In daily life, we play out counterfactuals constantly, from second-guessing exam answers to wondering about alternative career choices.

In science and engineering, however, counterfactuals are notoriously difficult to study. Real-world systems are full of interacting agents: cars on a road, animals in a group, or players on a court. Their behaviours are dynamic, interdependent, and shaped by hidden factors. Replicating the exact same situation twice, with and without a given intervention, is nearly impossible.

This is where artificial intelligence steps in. By building models that can learn from past interactions and predict future outcomes, researchers can begin to answer the great “what ifs” of science.

Building a network of possibilities

The Nagoya University team developed a method they call the Theory-based and Graph Variational Counterfactual Recurrent Network (TGV-CRN). While the name sounds dense, its purpose is straightforward: to create an interpretable AI model that can forecast how interventions would play out in systems with many agents.

The framework combines two powerful approaches. First, it uses graph variational recurrent neural networks (GVRNNs) to capture local interactions between agents, such as how cars respond to nearby vehicles or how basketball players defend against one another. Second, it integrates theory-based computations, embedding domain knowledge like physical laws or biological constraints. Together, this dual strategy allows the system to model both the micro-level interactions and the broader principles guiding behaviour.
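To make the hybrid idea more concrete, here is a minimal Python sketch, under loud assumptions, of how a theory-based prediction and a data-driven correction can be combined in a single update. It is not TGV-CRN: nothing here is variational or recurrent, the constant-velocity rule merely stands in for “domain knowledge”, and `neighbour_message`, `hybrid_step` and the weight matrix `W` (which a real model would learn from data) are hypothetical names chosen for illustration. Agents closer than `radius` are treated as connected in a graph, and each agent’s correction depends only on its neighbours.

```python
import numpy as np


def theory_step(pos, vel, dt):
    """Stand-in for domain knowledge: constant-velocity motion."""
    return pos + vel * dt


def neighbour_message(pos, vel, radius):
    """For each agent, average the relative velocity of its graph neighbours.

    Two agents are connected if they are closer than `radius`. Agents at
    exactly the same position (including the agent itself) are skipped,
    and an agent with no neighbours receives a zero message.
    """
    msgs = np.zeros_like(vel)
    for i in range(len(pos)):
        dist = np.linalg.norm(pos - pos[i], axis=1)
        neighbours = (dist > 0) & (dist < radius)
        if neighbours.any():
            msgs[i] = (vel[neighbours] - vel[i]).mean(axis=0)
    return msgs


def hybrid_step(pos, vel, W, dt=0.1, radius=1.0):
    """One update: a data-driven velocity correction, then the theory step.

    `W` is a hypothetical weight matrix that a real model would learn.
    """
    new_vel = vel + neighbour_message(pos, vel, radius) @ W
    return theory_step(pos, new_vel, dt), new_vel
```

With `W` set to zeros the update reduces to pure constant-velocity motion; a trained `W` would let the model capture how neighbours actually influence one another, which is the part a fixed physical rule cannot supply on its own.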

Unlike earlier models, TGV-CRN does not merely output a single prediction: it generates entire trajectories over time, which can be visualised to show how interventions ripple across a system. In the case of a self-driving car, for instance, the AI can simulate whether a human intervention at a critical moment leads to safer driving distances or to unnecessary braking.

Testing interventions in self-driving cars

One of the most compelling demonstrations comes from autonomous driving. Self-driving cars rely on artificial intelligence to interpret complex road environments. Yet despite these advances, human drivers often still need to intervene in risky scenarios. How can we test whether such interventions actually improve safety?

The researchers used the CARLA simulator, a virtual environment for autonomous vehicles, to model these scenarios. By simulating both intervention and non-intervention cases, TGV-CRN was able to predict the effect of an intervention on the distance driven without a collision.
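The logic of this kind of evaluation can be illustrated with a toy example. In a simulator, the same scenario can be run twice, once with and once without the intervention, so the true effect for that scenario is simply the difference between the two outcomes; with real-world data only one branch is ever observed, and that missing branch is what a model such as TGV-CRN has to predict. The sketch below is a hypothetical one-dimensional scene with made-up numbers, not CARLA and not the paper’s setup: an automated car holds a constant speed behind a slower car, and a simulated human who takes over from step `intervene_at` matches the lead car’s speed once the gap falls below 15 m. The function names are illustrative assumptions.

```python
def distance_without_collision(intervene_at=None, steps=100, dt=0.1):
    """Toy 1D scene: return how far the ego car travels before a collision.

    If no collision occurs within `steps`, the total distance is returned.
    `intervene_at` is the step from which a simulated human takes over
    (None means the automated policy drives throughout).
    """
    ego_x, ego_v = 0.0, 20.0     # position (m) and speed (m/s) of the ego car
    lead_x, lead_v = 30.0, 10.0  # a slower car starting 30 m ahead
    for step in range(steps):
        human_in_control = intervene_at is not None and step >= intervene_at
        if human_in_control and lead_x - ego_x < 15.0:
            ego_v = min(ego_v, lead_v)  # human slows to the lead car's speed
        # The automated policy in this toy simply keeps a constant speed.
        ego_x += ego_v * dt
        lead_x += lead_v * dt
        if ego_x >= lead_x:  # the ego car has reached the lead car
            return ego_x
    return ego_x


def intervention_effect(intervene_at):
    """Counterfactual effect: outcome with intervention minus outcome without."""
    return (distance_without_collision(intervene_at)
            - distance_without_collision(None))
```

With these toy numbers, intervening from the first step avoids the crash (116 m driven versus 60 m), while an intervention scheduled for after the collision has no effect at all: a crude version of the question of when an intervention actually makes a difference.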

The results were striking. Compared with baseline models, the new system achieved lower prediction errors in estimating both outcomes and covariates. Importantly, it could pinpoint the circumstances under which interventions were most effective. For example, the model revealed that human interventions in certain high-risk conditions led to significantly safer trajectories, while in other cases the vehicle’s own algorithms were sufficient.

In real-world applications, this capability could help engineers refine the transition between human control and automated driving, paving the way for safer autonomous transport.

This work lets us test ‘what if’ scenarios in driving, biology, and sports without running new experiments each time.

-Prof. Keisuke Fujii

From swarms to science: Modelling animal behaviour

Beyond cars and roads, the team applied their model to biological systems. Animal groups such as flocks of birds or schools of fish are prime examples of multiagent systems. Researchers often intervene in such systems to test behavioural responses, but it is rarely possible to repeat experiments with every possible intervention timing.

Using a synthetic dataset generated with the Boid model, a classic simulation of flocking, the researchers applied interventions that changed the simulated animals’ recognition patterns. The model successfully predicted how the group’s angular momentum, a measure of how strongly the flock rotates around its own centre, shifted depending on the intervention.
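Group angular momentum condenses a whole flock into one number. A common definition in the collective-motion literature, assumed here because the paper’s exact formula may differ, averages the cross product between each individual’s unit offset from the group centroid and its unit velocity. A minimal 2D sketch (the function name is hypothetical):

```python
import numpy as np


def group_angular_momentum(pos, vel):
    """Normalised group angular momentum of 2D agents, between 0 and 1.

    pos, vel: arrays of shape (n_agents, 2). Assumes no agent sits exactly
    at the centroid and no agent is stationary (both would divide by zero).
    """
    offsets = pos - pos.mean(axis=0)
    r_unit = offsets / np.linalg.norm(offsets, axis=1, keepdims=True)
    v_unit = vel / np.linalg.norm(vel, axis=1, keepdims=True)
    # z-component of the 2D cross product r x v for each agent
    cross_z = r_unit[:, 0] * v_unit[:, 1] - r_unit[:, 1] * v_unit[:, 0]
    return float(abs(cross_z.mean()))
```

A flock milling in a circle scores close to 1, while a flock moving in parallel tends to score near 0, so a shift in this value after an intervention signals a change in collective behaviour.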

The significance here is not just mathematical. In behavioural science, running large numbers of experiments can be time-consuming and may raise ethical concerns, especially when animals are involved. A reliable counterfactual prediction framework could reduce the need for repeated testing, offering insights into when interventions are most efficient and humane.

Decoding decision-making in basketball

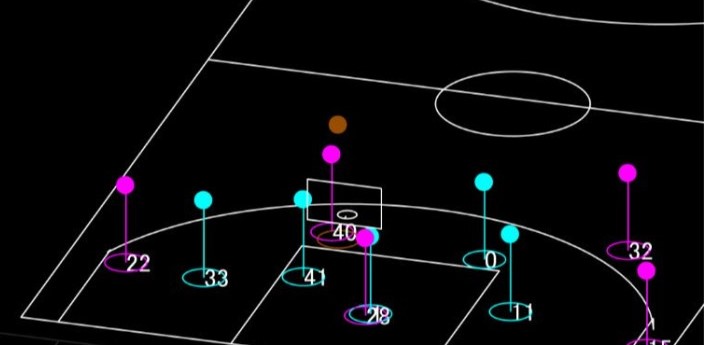

Perhaps the most eye-catching demonstration came from the world of sport. The researchers analysed tracking data from over 47,000 offensive plays in the NBA. Using player and ball trajectories, they tested counterfactual scenarios: what if the player with the ball had passed instead of shooting? What if a different teammate had taken the shot?

The TGV-CRN generated realistic counterfactual predictions, even capturing the movement of defenders chasing alternative passes. In one example, the AI predicted that an extra pass could have created a more promising scoring opportunity than the actual shot taken.

This has significant implications for sports analytics. Coaches and players are already embracing data to refine strategies, but most systems can only evaluate what did happen, not what could have happened. By opening the door to counterfactuals, the model offers a way to assess decision-making skills and uncover hidden opportunities in competitive games.

Balancing data-driven learning with theory

A key strength of the model lies in its hybrid design. Many AI systems rely solely on data, risking overfitting or missing subtle physical constraints. Others lean heavily on theory but fail to capture the variability of real-world interactions.

By combining both, the Nagoya team’s framework improves interpretability. For instance, in basketball simulations, the AI not only predicted player movements but also ensured that passes and ball trajectories obeyed the laws of physics. In autonomous driving, it respected safety rules like collision avoidance.
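As one illustration of what a physical constraint on the ball could look like, the sketch below computes an idealised ballistic path (gravity only, no drag or spin) and measures how far a predicted ball trajectory strays from it. This is an assumption for illustration only: the article does not say how TGV-CRN encodes ball physics, and `ball_flight` and `physics_residual` are hypothetical names. A residual like this could serve as a training penalty or as a sanity check on generated counterfactual plays.

```python
import numpy as np

G = 9.81  # gravitational acceleration in m/s^2


def ball_flight(p0, v0, times):
    """Idealised ballistic path: gravity only, no air drag or spin.

    p0: release position (x, y, z) in metres; v0: release velocity in m/s;
    times: 1D sequence of seconds since release. Returns shape (len(times), 3).
    """
    t = np.asarray(times, dtype=float)[:, None]  # column vector for broadcasting
    gravity = np.array([0.0, 0.0, -G])
    return np.asarray(p0, float) + np.asarray(v0, float) * t + 0.5 * gravity * t**2


def physics_residual(predicted, p0, v0, times):
    """Mean distance (m) between a predicted ball path and the ballistic one."""
    expected = ball_flight(p0, v0, times)
    return float(np.linalg.norm(np.asarray(predicted) - expected, axis=1).mean())
```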

This balance makes the predictions not just accurate but plausible, a crucial factor when decisions affect safety or scientific outcomes.

Implications for society and technology

The broader impact of this research extends across disciplines. In transportation, it could accelerate the safe deployment of self-driving vehicles by identifying when human intervention truly makes a difference. In biology, it could streamline experiments and reduce animal use. In sports, it offers a new frontier in performance analysis.

The approach could also reach further. The same principles could be applied to healthcare, testing how different treatment strategies might affect patients over time. In public policy, counterfactual models could evaluate the effects of interventions such as lockdowns, educational reforms, or climate measures, providing decision-makers with evidence-based insights.

Challenges and the road ahead

Despite its promise, the study acknowledges limitations. Counterfactual prediction remains a highly complex task, especially when hidden confounders influence outcomes. The team’s method attempts to capture these through representation learning, but no model can perfectly reconstruct unobserved realities.
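Why hidden confounders are so troublesome can be seen in a toy simulation. Below, a hypothetical hidden variable `u` (think of it as an unmeasured “risk level”) makes treatment more likely and also worsens the outcome, while the true effect of treatment is fixed at +1. A naive comparison of treated and untreated cases gets even the sign wrong; adjusting for `u` with ordinary least-squares regression recovers the effect, but only because the simulation lets us observe `u`. All names and numbers are illustrative assumptions, not the paper’s method.

```python
import numpy as np


def confounded_data(n=100_000, true_effect=1.0, seed=0):
    """Simulate a treatment whose assignment and outcome share a hidden cause."""
    rng = np.random.default_rng(seed)
    u = rng.normal(size=n)                           # hidden confounder
    t = (u + rng.normal(size=n) > 0).astype(float)   # treated more often when u is high
    y = true_effect * t - 2.0 * u + rng.normal(size=n)  # u also lowers the outcome
    return u, t, y


def naive_effect(t, y):
    """Difference in mean outcome between treated and untreated cases."""
    return float(y[t == 1].mean() - y[t == 0].mean())


def adjusted_effect(u, t, y):
    """Treatment coefficient from regressing y on [1, t, u] (needs u observed)."""
    X = np.column_stack([np.ones_like(t), t, u])
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return float(coef[1])
```

In real data `u` is not available, which is why methods such as TGV-CRN try to infer a stand-in for it from observations, and why no model can guarantee a perfect reconstruction.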

Moreover, the success of such systems depends on the quality of data. In sports, player tracking is highly detailed, but in other fields such as healthcare or policy, data may be patchy or biased. Ensuring fairness and robustness will be critical as the method moves towards broader applications.

Still, the achievement is significant. By bridging data-driven AI with domain knowledge, the researchers have charted a path towards more interpretable and practical counterfactual modelling.

The next time you watch a tense game or sit in a self-driving car, remember: science is getting closer to answering one of the oldest questions of all: what if?

Reference

Fujii, K., Takeuchi, K., Kuribayashi, A., Takeishi, N., Kawahara, Y., & Takeda, K. (2024). Estimating counterfactual treatment outcomes over time in complex multiagent scenarios. IEEE Transactions on Neural Networks and Learning Systems. Advance online publication. https://doi.org/10.1109/TNNLS.2024.3361166