Artificial intelligence is steadily becoming embedded in higher education, promising more personalised learning, real-time feedback and data-driven decision-making. Universities around the world are investing in analytics-based systems that can track engagement, predict performance and intervene when students appear to struggle. These tools are often promoted as neutral, efficient and beneficial. Yet for students, the experience of learning under artificial intelligence may feel far more complex.

A recent study led by Bingyi Han and colleagues, published in the International Journal of Artificial Intelligence in Education, asks a critical but underexplored question: how do students themselves imagine the ethical and pedagogical consequences of artificial intelligence in higher education before such systems become fully normalised? The research, titled “Students’ perceptions: Exploring the interplay of ethical and pedagogical impacts for adopting AI in higher education”, was conducted at the University of Melbourne and offers a rare student-centred perspective on the future of AI-driven education.

Rather than focusing on algorithms or system performance, the study foregrounds how artificial intelligence may reshape learner autonomy, classroom environments, social relationships and the very meaning of learning. Its findings suggest that the impact of AI in education cannot be understood through technical metrics alone. It must also be examined through the lived, emotional and ethical experiences of students.

A student-centred approach to AI ethics

Most debates around artificial intelligence in education focus on familiar ethical concerns such as data privacy, algorithmic bias and transparency. While these issues are undeniably important, they often overlook how AI systems interact with pedagogy and student behaviour in everyday learning contexts. Han and colleagues argue that this narrow focus risks leaving students more vulnerable rather than protecting them.

To address this gap, the researchers adopted a student-centred ethical lens. They positioned students not merely as data sources or system users, but as active participants whose perceptions, fears and expectations matter. This shift is significant. Students are the primary subjects of educational data analytics, yet their voices are rarely central in decisions about how AI tools are designed and deployed.

The study challenges the assumption that ethical compliance alone is sufficient. Meeting legal and institutional standards does not guarantee that AI systems will support meaningful learning or maintain student trust. Ethical AI in education, the authors suggest, must also account for autonomy, well-being, agency and social relationships within learning environments.

As AI becomes more embedded in education, students are increasingly shaped by systems they did not choose or design. This research shows that ethical concerns about AI in learning are not just technical issues — they fundamentally affect how students experience autonomy, relationships, and what it means to learn.

-Bingyi Han

Imagining future classrooms through storytelling

One of the most distinctive aspects of the research is its use of the Story Completion Method, a qualitative and speculative research approach drawn from psychology and design studies. Because many students have limited direct experience with advanced AI-driven educational tools, the researchers did not ask participants to evaluate existing systems. Instead, they invited them to imagine plausible future classrooms shaped by artificial intelligence.

Seventy-one students from Australia and China were presented with carefully designed story prompts describing university settings where AI systems monitor behaviour, analyse emotions and deliver personalised learning interventions. Participants were asked to complete these stories by describing what happens next and how students and teachers respond.

This speculative approach allowed participants to explore ethical and pedagogical implications without requiring technical expertise. It also enabled the researchers to examine how students anticipate changes to learning autonomy, classroom dynamics and relationships under conditions of AI monitoring and automation.

Autonomy under algorithmic guidance

One of the most prominent themes to emerge from the study is concern about learner autonomy. Many students imagined AI-driven systems that strongly influence what they study, how they study and when they receive feedback. While some participants welcomed guidance and structure, others perceived these interventions as restrictive or even manipulative.

Students described scenarios in which personalised learning pathways became passive learning routes, shaped by algorithmic assumptions about what constitutes a good learner. Some feared that curiosity-driven exploration would be replaced by optimisation for performance metrics such as grades, engagement scores or predicted outcomes.

This perceived loss of autonomy was closely linked to concerns about over-reliance on AI. Participants worried that constant guidance and automated feedback might discourage independent thinking, problem-solving and self-regulation. Learning, in these narratives, risked becoming something that happens to students rather than something they actively shape.

The emotional weight of being watched

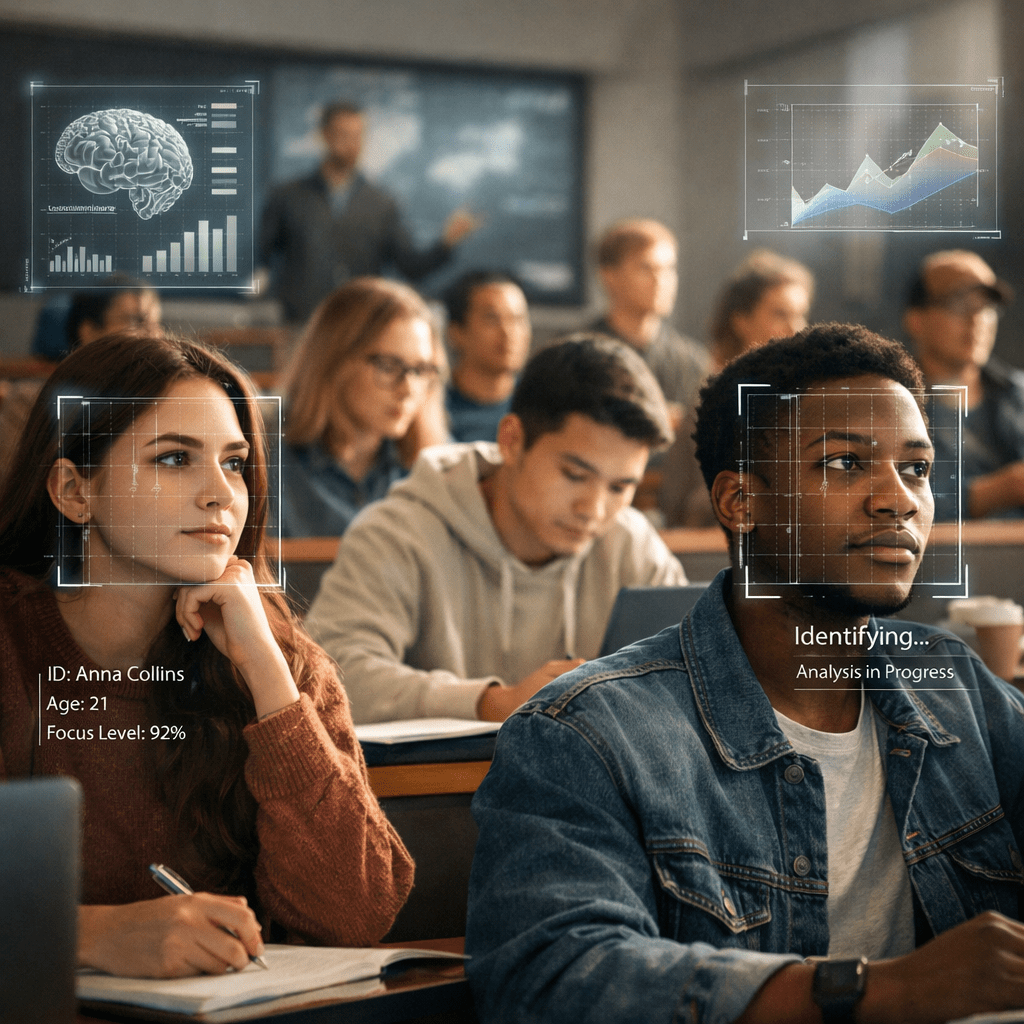

Another powerful theme concerns the experience of surveillance. In many imagined classrooms, AI systems continuously tracked facial expressions, eye movement, speech patterns and engagement levels. Students frequently described these environments as intense, anxiety-inducing and emotionally draining.

The sense of being watched altered how students behaved. Participants imagined themselves performing engagement rather than genuinely engaging, speaking to satisfy the system rather than to explore ideas. Some described fear of making mistakes, worrying that every lapse in concentration would be recorded and evaluated.

These narratives reveal how AI monitoring may disrupt concentration and undermine psychological safety. While some students acknowledged that monitoring could increase participation, many felt that this came at the cost of authenticity. Learning environments became places of compliance rather than curiosity.

When efficiency competes with enjoyment

Artificial intelligence is often promoted as a tool for improving efficiency in education. The study shows that students recognise these benefits, particularly in relation to feedback, self-reflection and support for struggling learners. Many participants believed AI systems could help identify weaknesses, personalise study plans and ensure that no student is overlooked.

However, these advantages were frequently weighed against concerns about the quality of the learning experience. Students worried that AI-driven education prioritises outcomes over process, turning learning into a task-oriented activity focused on measurable results rather than intellectual growth.

Several participants described a loss of enjoyment, characterising learning as over-digitalised, transactional and emotionally flattened. The joy of uncertainty, exploration and informal discussion was seen as vulnerable to automation. In this sense, the study highlights a tension between efficiency and educational richness that sits at the heart of AI adoption.

Changing relationships in AI-enhanced education

Beyond individual experiences, the research explores how AI systems may reshape social and pedagogical relationships. Many students anticipated reduced interaction with peers, particularly informal conversations that support collaborative learning and social belonging.

In classrooms governed by monitoring and evaluation, students imagined becoming more cautious, less expressive and more competitive. Peer learning, once a source of mutual support, risked being replaced by comparison and ranking. These changes were not limited to student relationships.

Participants also described shifts in relationships with teachers. While some welcomed AI systems that could alert instructors to student difficulties, others feared that automation would make interactions more impersonal. Teachers, in these narratives, risked becoming managers of dashboards rather than mentors engaged in dialogue.

The interplay of ethical and pedagogical impacts

A key contribution of the study lies in its analysis of how these impacts interact rather than occur in isolation. The researchers show that AI monitoring influences behaviour, which in turn shapes learning environments, social relationships and perceptions of autonomy. Customisation and personalisation can simultaneously support self-reflection and constrain independence.

This interconnectedness reflects the socio-technical nature of educational technology. AI systems do not simply add new features to existing classrooms. They actively reshape practices, expectations and values. As such, their ethical implications cannot be separated from their pedagogical effects.

The findings align with broader scholarship on technology adoption, which emphasises that tools and social practices evolve together. In education, this means that introducing AI systems without careful consideration of student experience may lead to unintended and lasting consequences.

Reference

Han, B., Nawaz, S., Buchanan, G., & McKay, D. (2025). Students’ perceptions: Exploring the interplay of ethical and pedagogical impacts for adopting AI in higher education. International Journal of Artificial Intelligence in Education, 35, 1887–1912. https://doi.org/10.1007/s40593-024-00456-4